Rethink Your #BizzApps Integrations for Performance Based on Cloud Design Patterns [ENG]


Introduction

Over the last few months, I have been in contact with a number of Finance and Operations customers facing similar challenges affecting their systems’ performance and stability. What do these customers have in common?

- massive data volumes to process, and

- data management customizations not designed for performance, either for data processing or for data ingestion, which we commonly refer to simply as “Integrations”.

In all cases, performance or throughput bottlenecks were not discovered until it was too late in the implementation project because:

- Performance was not considered when setting business requirements.

- Performance was not considered during the overall architecture and system design (or there was no high-level system design at all).

- Performance was not considered during the development process, which in most cases followed obsolete, on-premises-focused monolithic design patterns that do not fit well in massively distributed cloud environments.

- No proactive testing was performed until the solution was completely deployed.

As a result, customers got a solution that does what it was expected to do but (by far, in some cases) cannot do it within the expected time, sometimes leading to regulatory fines or system disruptions. What could have been done differently?

When working on business requirements, the ‘what’ is as important as the ‘when’, the ‘how much’ or the ‘how often’. Business requirements must include volume estimations and performance expectations, as these volumes set the scale, the ultimate goal, for system design, development, and deployment.

With properly sized business requirements, early solution pilots can be developed and tested quickly to validate whether the expected throughput can be achieved before investing in a fully deployed system.
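As a worked example of turning a volume estimate into a testable target, the following minimal Python sketch converts a record count and a processing window into a required rate and checks it against what a pilot measured. The figures (2,000,000 lines in a 4-hour overnight window, 25 records per second per worker, a 1.5 safety margin) are purely hypothetical, and the worker estimate assumes near-linear scaling, which real systems rarely achieve perfectly.

```python
import math


def required_throughput(records: int, window_hours: float) -> float:
    """Records per second needed to process `records` inside the agreed time window."""
    return records / (window_hours * 3600)


def meets_requirement(records: int, window_hours: float, measured_rps: float,
                      safety_margin: float = 1.5) -> bool:
    """True if the rate measured in a pilot covers the target plus a safety margin."""
    return measured_rps >= required_throughput(records, window_hours) * safety_margin


def workers_needed(records: int, window_hours: float, rps_per_worker: float,
                   safety_margin: float = 1.5) -> int:
    """Parallel workers (e.g. batch tasks) needed, assuming near-linear scaling."""
    target = required_throughput(records, window_hours) * safety_margin
    return math.ceil(target / rps_per_worker)


if __name__ == "__main__":
    # Hypothetical figures: 2,000,000 lines in a 4-hour overnight window.
    print(f"{required_throughput(2_000_000, 4):.1f} records/s required")  # 138.9 records/s required
    print(meets_requirement(2_000_000, 4, measured_rps=150))  # False
    print(workers_needed(2_000_000, 4, rps_per_worker=25))  # 9
```

A pilot that reaches 150 records per second looks comfortable against the raw target, but not once a margin for peaks and retries is applied. This is exactly the kind of gap worth discovering before full deployment.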

When in doubt, choosing the right tool for each job and basing your customizations on well-known cloud design patterns will get you off to a safe start.

One lesson we can draw is that technical problems must often be approached like people problems. Exercise critical thinking: challenge every business requirement that does not improve the business, and challenge technical decisions that will create more problems than they solve. When designing a system architecture, change management, active listening and challenging ideas that create artificial complexity are skills that must be actively exercised. Blindly accepting every idea will seriously affect the extensibility, scalability and resilience of your solutions in the long term.

Let us look at some practical examples that illustrate what I mean and will hopefully shed some light if you are facing similar situations:

Sync vs Async integration calls

In Dynamics F&O (as previously in Dynamics AX), when we talk about synchronous calls we most likely mean web service (REST or SOAP) endpoint calls.

Sync web service calls between isolated systems are problematic for many reasons, but in my opinion the main one is that, once a sync operation is set up between two systems, they are no longer isolated. In practice, sync calls mean the two systems must be treated as one system from then on in terms of maintenance downtime, performance expectations and much more, as I will discuss later.

Sync calls add pressure on performance because the calling system is actively waiting for an answer, and a delayed response may lead to timeouts or user-facing errors. The source system can be designed to wait longer, but then what is the point of a sync call? If the system can wait, just use an async call: it will make orchestration easier and the entire system more robust, and it will keep both components truly isolated.
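A minimal Python sketch of the difference, assuming a hypothetical `slow_destination` function that stands in for a busy endpoint. The synchronous caller blocks on the answer and reports a timeout, while the asynchronous caller only needs a queue to accept the message. A thread pool and an in-memory `queue.Queue` stand in for real web service and queue infrastructure.

```python
import queue
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout


def slow_destination(payload: dict, delay_s: float) -> str:
    """Hypothetical stand-in for a busy inbound endpoint that takes delay_s to answer."""
    time.sleep(delay_s)
    return f"processed {payload['id']}"


def sync_send(executor: ThreadPoolExecutor, payload: dict,
              delay_s: float, timeout_s: float) -> str:
    """Synchronous call: the caller blocks until it gets an answer or gives up."""
    future = executor.submit(slow_destination, payload, delay_s)
    try:
        return future.result(timeout=timeout_s)
    except FutureTimeout:
        # The caller sees an error even though the destination may still finish the work.
        return "timeout"


def async_send(outbox: "queue.Queue[dict]", payload: dict) -> str:
    """Asynchronous hand-off: the caller only needs the queue to accept the message."""
    outbox.put(payload)
    return "accepted"


if __name__ == "__main__":
    with ThreadPoolExecutor(max_workers=1) as pool:
        print(sync_send(pool, {"id": 1}, delay_s=0.2, timeout_s=0.05))
    outbox: "queue.Queue[dict]" = queue.Queue()
    print(async_send(outbox, {"id": 1}), outbox.qsize())
```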

Sync calls require the two systems involved to scale at the same time. If large data volumes are expected, the source system must be able to call fast enough, and the inbound system must process these messages as fast as they arrive. The two systems are forced to evolve and react to peaks in data ingestion in parallel, and both must be designed to perform in the worst case. This again makes them interdependent rather than isolated. In some edge scenarios, if one of the systems cannot handle the workload and collapses, the other can do nothing and the whole operation may be disrupted. A well-designed async integration will keep working if one component fails: it may go slower than expected, but it will continue and eventually finish the work.
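The isolation argument can be sketched in a few lines of Python. The hypothetical `FlakyDestination` below is unavailable for its first few attempts. The source has already handed all messages to a queue and does not depend on the destination's state. The consumer peeks at the head of the queue and removes a message only after it has been processed, so nothing is lost while the destination is down. A real consumer would also wait (back off) between retries, which this sketch omits.

```python
from collections import deque
from typing import Any, Deque, List


class FlakyDestination:
    """Hypothetical destination that is unavailable for its first `down_for` attempts."""

    def __init__(self, down_for: int) -> None:
        self.down_for = down_for
        self.attempts = 0
        self.processed: List[Any] = []

    def process(self, msg: Any) -> bool:
        self.attempts += 1
        if self.attempts <= self.down_for:
            return False  # unavailable: the message must stay in the queue
        self.processed.append(msg)
        return True


def drain(q: Deque[Any], dest: FlakyDestination, max_rounds: int = 100) -> int:
    """Consume the queue; a message is removed only after successful processing."""
    rounds = 0
    while q and rounds < max_rounds:
        rounds += 1
        msg = q[0]  # peek without removing
        if dest.process(msg):
            q.popleft()
    return rounds


if __name__ == "__main__":
    outbox: Deque[int] = deque(range(5))  # the source finished sending without waiting
    destination = FlakyDestination(down_for=3)
    print(drain(outbox, destination), destination.processed)
```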

In short, sync calls must be avoided whenever possible (and we must push to make it possible). A sync call must come with a strong business reason to justify the extra long-term cost and complexity it adds to the overall design. Sync calls can be avoided most of the time, unless our system is expected to react very quickly after an event occurs. As an ERP is unlikely to have such a critical fast-response responsibility, challenge sync integration decisions at the highest possible business level. Remember: ‘always async by default’.

Check the Azure Architecture Center:

Inbound integrations

Importing data from external systems (whether from third parties or from other systems within the organization) is a key part of cloud computing and a critical part of designing a system architecture that scales properly.

All the customers I referred to in the introduction have massive inbound integrations supporting critical business processes (posting invoices, ledger journals, purchase orders, closing production orders, …) across different industries: banking, payment processors, manufacturing, retail, … you name it. Most of these integrations were not designed to scale to the levels the business was expecting, which caused several challenges. In most cases, customizations or configurations were limiting the standard application’s ability to perform well.

Most of them were designed as large atomic sync operations using web services as the inbound channel. They created massive database transactions and kept sessions and transactions open for long periods, which also significantly affected the performance of other processes and user sessions.

Database transactions, basic table and index design, and fundamental concurrency mechanisms such as transaction blocking were completely ignored, heavily limiting Azure SQL’s ability to operate efficiently.

Most of these integrations were file-based. They followed the external systems’ design and used technologies that are obsolete but still widely used in some industries, such as FTP connections, which we were forced to maintain for compatibility reasons.

In other cases, such technologies were intentionally part of the original design, either because the design was created long ago to work on-premises or because the designers couldn’t find a better option. Sometimes we could replace them with a more modern, cloud-oriented file storage service that simplifies operations and improves performance, resilience and security maintenance.

Technical teams did not have a clear and precise understanding of the business outcomes expected from these integrations. That led to wrong technical decisions based on unconfirmed assumptions or biases about what the business might expect, which in the end proved wrong. When the business decision makers were presented with changes to the technical design, they had no problem accepting the technical changes required to improve throughput and system resilience.

How to design inbound integrations at scale? (standard is fast, don’t get in its way)

When possible, use standard modules like the Data Management Framework to drive your integrations. There may be the need to create a middleware component to face external systems like a custom API or a queue-based load leveling solution, but always consider if the data import/export itself can be ultimately delegated to standard components to improve maintenance and extensibility in the long term.

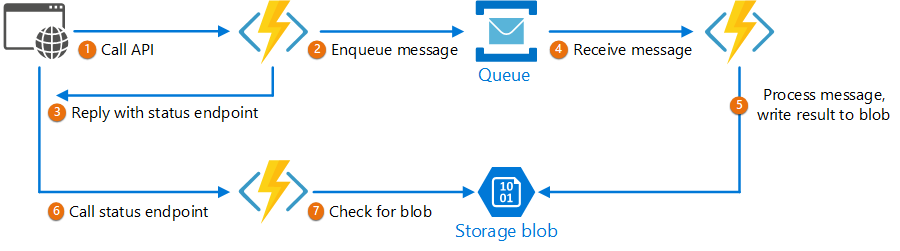

Consider how the system must react when Dynamics is down for maintenance or unavailable for any other reason. For example, if F&O goes down, its internal web services will be down too, whereas external Azure components such as an Azure Service Bus queue have remarkably high uptime. Use the strengths of each cloud component to design a robust solution.

How will the system react to sudden, large data volume peaks, such as processes that import a full day of operations overnight? Can this data be pre-processed using Azure data solutions (such as pipelines in Azure Synapse Analytics, Azure Data Factory, Azure Databricks, …)? This way, the volumes reaching Dynamics may be reduced and transformed into a structure better suited for performance than the original format (e.g., breaking large files into smaller units, creating DMF data packages on the fly, …).
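Breaking large files into smaller units is simple to sketch. The following Python generator splits a CSV file into chunks with a fixed maximum number of data rows. It repeats the header in each chunk so every piece can be imported independently. The chunk size is an assumption to tune with pilots, and the sketch does not produce actual DMF data packages, which have their own format.

```python
import csv
import io
from typing import Iterable, Iterator, List


def _to_csv(header: List[str], rows: List[List[str]]) -> str:
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return buf.getvalue()


def split_csv(lines: Iterable[str], rows_per_chunk: int) -> Iterator[str]:
    """Yield CSV chunks of at most rows_per_chunk data rows, each repeating the header."""
    reader = csv.reader(lines)
    header = next(reader, None)
    if header is None:  # empty input: nothing to split
        return
    chunk: List[List[str]] = []
    for row in reader:
        chunk.append(row)
        if len(chunk) == rows_per_chunk:
            yield _to_csv(header, chunk)
            chunk = []
    if chunk:  # remaining rows that did not fill a whole chunk
        yield _to_csv(header, chunk)


if __name__ == "__main__":
    data = "id,amount\n" + "".join(f"{i},{i * 10}\n" for i in range(10))
    for piece in split_csv(io.StringIO(data), rows_per_chunk=4):
        print(piece)
```

Each chunk can then be processed, retried or rejected on its own, instead of one huge file failing or blocking as a whole.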

Agree with the functional decision makers on the smallest data set that must be considered atomic for each operation (the smallest piece of data that is either imported or rejected as a whole, regardless of individual transaction results). This is key information for designing multi-threaded, multi-server parallel operations, both inside Dynamics (through batch tasks, for example) and in external data processing tools, such as parallel pipelines, that allow data processing to scale massively for big datasets.
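Once the atomic unit is agreed, parallelism follows naturally. This Python sketch assumes, hypothetically, that the atomic unit is a journal. Lines are grouped by their `journal` value and each group is processed on its own worker. A group is rejected as a whole if any of its lines fails validation. The `import_unit` function is a placeholder for the real import, which would run each unit in its own transaction.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple


def group_by_unit(lines: List[dict], key: str) -> Dict[str, List[dict]]:
    """Group lines by the agreed atomic unit (hypothetically, a journal id)."""
    groups: Dict[str, List[dict]] = defaultdict(list)
    for line in lines:
        groups[line[key]].append(line)
    return dict(groups)


def import_unit(unit_id: str, lines: List[dict]) -> Tuple[str, str]:
    """All-or-nothing placeholder: validate every line first, import nothing if any fails."""
    if any(line["amount"] is None for line in lines):
        return unit_id, "rejected"
    # A real import would write all lines of this unit in a single transaction here.
    return unit_id, f"imported {len(lines)} line(s)"


def import_all(lines: List[dict], key: str = "journal", workers: int = 4) -> Dict[str, str]:
    """Process independent units in parallel; units never share a transaction."""
    groups = group_by_unit(lines, key)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(lambda item: import_unit(*item), groups.items())
        return dict(results)


if __name__ == "__main__":
    sample = [
        {"journal": "J1", "amount": 10},
        {"journal": "J1", "amount": 5},
        {"journal": "J2", "amount": None},  # invalid line: the whole J2 unit is rejected
        {"journal": "J3", "amount": 1},
    ]
    print(import_all(sample))
```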

For inbound integrations with Dynamics, a robust and scalable design is the queue-based load leveling pattern. The external system queues data packages in a queue service such as Azure Service Bus, and the destination system retrieves packages from the queue and processes them asynchronously as fast as it can. If the system receives sudden data spikes, the data is queued and processed by the backend at its own pace, keeping the system stable.
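The effect of load leveling can be shown with a small discrete simulation in Python. Messages arrive in bursts, while the backend processes at most a fixed number per tick. The queue absorbs the spike and drains it at the backend's steady pace. The arrival pattern and capacity are hypothetical.

```python
from typing import List


def simulate_load_leveling(arrivals: List[int], capacity_per_tick: int) -> List[int]:
    """Return the queue backlog after each tick.

    arrivals[t] is the number of messages arriving at tick t (bursty);
    capacity_per_tick is the most the backend processes per tick (steady).
    """
    backlog = 0
    history: List[int] = []
    t = 0
    while t < len(arrivals) or backlog > 0:
        backlog += arrivals[t] if t < len(arrivals) else 0
        backlog -= min(backlog, capacity_per_tick)  # never more than the backend can take
        history.append(backlog)
        t += 1
    return history


if __name__ == "__main__":
    # A spike of 50 messages against a backend that handles 20 per tick.
    print(simulate_load_leveling([0, 50, 0, 0, 0], capacity_per_tick=20))  # [0, 30, 10, 0, 0]
```

The backend never sees more than its capacity. The spike shows up only as a temporary backlog in the queue instead of as timeouts or a collapse.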

Designing a queue-based system for a highly concurrent environment is not trivial. It requires a deep understanding of how concurrency is managed in every system component, plus safety checks that prevent processes from competing for the same resources. That is why building on a cloud service like Azure Service Bus is easier than creating queues on database tables (which are not designed for that). If interested, see this example: https://learn.microsoft.com/en-us/answers/questions/222532/sql-server-how-to-design-table-like-a-queue.
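To illustrate the kind of safety check a queue service provides, the following Python class mimics, in a very simplified way, the peek-lock receive mode offered by Azure Service Bus. A received message is locked for a period rather than deleted, and other consumers cannot see it while the lock is held. The message is deleted only when completed, and it becomes visible again if the lock expires (for example, because the consumer crashed). This is a toy in-memory stand-in for illustration, not the Service Bus SDK.

```python
import threading
import time
import uuid
from typing import Any, Dict, List, Optional, Tuple


class PeekLockQueue:
    """Toy in-memory queue mimicking peek-lock delivery (illustration only)."""

    def __init__(self, lock_seconds: float) -> None:
        self.lock_seconds = lock_seconds
        self._mutex = threading.Lock()
        self._order: List[str] = []                        # FIFO order of message ids
        self._messages: Dict[str, Tuple[Any, float]] = {}  # id -> (body, locked_until)

    def send(self, body: Any) -> None:
        with self._mutex:
            msg_id = str(uuid.uuid4())
            self._messages[msg_id] = (body, float("-inf"))  # visible immediately
            self._order.append(msg_id)

    def receive(self) -> Optional[Tuple[str, Any]]:
        """Lock and return the first visible message, or None if all are locked or gone."""
        with self._mutex:
            now = time.monotonic()
            for msg_id in self._order:
                body, locked_until = self._messages[msg_id]
                if locked_until <= now:
                    self._messages[msg_id] = (body, now + self.lock_seconds)
                    return msg_id, body
            return None

    def complete(self, msg_id: str) -> None:
        """Delete a message once it has been processed successfully."""
        with self._mutex:
            if msg_id in self._messages:
                del self._messages[msg_id]
                self._order.remove(msg_id)
```

Because the lock is taken and checked inside a single critical section, two competing consumers can never receive the same message at the same time. Implementing this correctly on a database table is the hard part the linked discussion refers to.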

Another solution that scales well is the choreography pattern, which distributes the workload between the different components (data pipelines, internal Batch jobs, data import/export jobs, …). Concurrency is a complex topic in itself that we can discuss in another post, if there is interest.
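A minimal Python sketch of choreography, assuming hypothetical event names and components. Each component subscribes to the event it cares about and publishes the next one, and no central orchestrator decides the sequence. An in-memory `EventBus` stands in for a real messaging service.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List, Tuple

Handler = Callable[[dict], None]


class EventBus:
    """In-memory stand-in for a messaging service (illustration only)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)
        self._pending: Deque[Tuple[str, dict]] = deque()
        self.log: List[str] = []

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, data: dict) -> None:
        self._pending.append((event_type, data))

    def run(self) -> None:
        """Deliver events until no component publishes anything new."""
        while self._pending:
            event_type, data = self._pending.popleft()
            self.log.append(event_type)
            for handler in self._subscribers[event_type]:
                handler(data)


def wire_components(bus: EventBus, posted: List[int], rows_per_package: int = 2) -> None:
    """Hypothetical components; none of them knows about the others, only about events."""

    def pipeline(data: dict) -> None:  # splits an incoming file into packages
        for start in range(0, data["rows"], rows_per_package):
            bus.publish("package.ready", {"package": start // rows_per_package})

    def importer(data: dict) -> None:  # imports a package, then announces it
        bus.publish("package.imported", data)

    def posting_job(data: dict) -> None:  # posts what was imported
        posted.append(data["package"])

    bus.subscribe("file.received", pipeline)
    bus.subscribe("package.ready", importer)
    bus.subscribe("package.imported", posting_job)


if __name__ == "__main__":
    bus, posted = EventBus(), []
    wire_components(bus, posted)
    bus.publish("file.received", {"rows": 5})
    bus.run()
    print(posted, bus.log)
```

Adding a new step (for example, a notification after posting) means subscribing one more component, without changing the existing ones.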

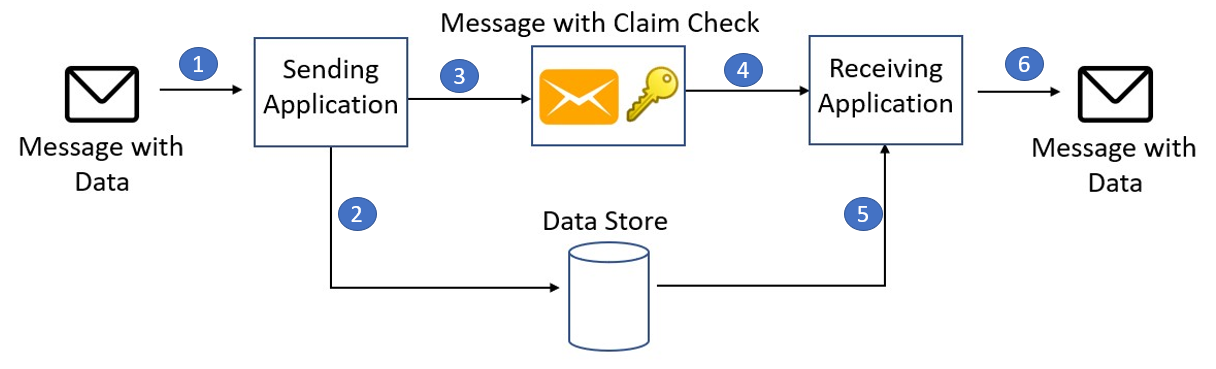

If the data packages are too big for the queue system, the claim-check pattern can be used. The data package is stored temporarily in an appropriate data store, and only the minimum required information is added to the queue, so the destination knows a package is waiting and where to find the full payload when needed. But again, reconsider whether data packages can be pre-processed online with a data pipeline, so that they reach Dynamics in digestible bites.
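The claim-check pattern can be sketched as follows in Python. The `BlobStore` class is a hypothetical in-memory stand-in for a real storage service, and `MAX_MESSAGE_BYTES` is an assumed limit for illustration; check the actual message size limit of the queue service and tier you use. Small payloads travel inline, while large ones are stored and replaced by a reference.

```python
import json
import uuid
from typing import Dict

MAX_MESSAGE_BYTES = 256 * 1024  # assumed queue message limit for this sketch


class BlobStore:
    """Hypothetical in-memory stand-in for a storage service."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        key = str(uuid.uuid4())
        self._blobs[key] = data
        return key

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def to_message(payload: dict, store: BlobStore, limit: int = MAX_MESSAGE_BYTES) -> dict:
    """Sender side: inline small payloads, store large ones and send only the claim check."""
    body = json.dumps(payload).encode("utf-8")
    if len(body) <= limit:
        return {"inline": payload}
    return {"claim_check": store.put(body), "size": len(body)}


def from_message(message: dict, store: BlobStore) -> dict:
    """Receiver side: use the inline payload or redeem the claim check from storage."""
    if "inline" in message:
        return message["inline"]
    return json.loads(store.get(message["claim_check"]))


if __name__ == "__main__":
    store = BlobStore()
    message = to_message({"lines": ["x" * 100] * 10}, store, limit=100)
    print(sorted(message))  # only the reference and size travel through the queue
    print(len(from_message(message, store)["lines"]))
```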

Check the Azure Architecture Center:

- Design patterns – Queue-Based Load Leveling

- Design patterns – Claim-Check

- Design patterns – Choreography

Outbound integrations

The main principles of outbound integrations are similar to those of inbound ones regarding how to process (including pre-processing when appropriate) and transport information between systems, preferably in an isolated and asynchronous way, but there are some particularities in Finance and Operations:

Again, consider building the fundamentals of your data export processes on standard features such as the Data Management Framework. You may need some customizations to allow external systems to trigger Data Management export jobs and retrieve the results (you don’t want to expose the DMF API directly to third parties, although it may work for tightly coupled in-house systems). Using DMF to export data will help with performance, extensibility and maintenance, and will honor internal Dynamics security constraints.

Can your data export jobs be triggered from data that is already exported? For example, if you already have configured exporting data to Azure Data Lake you can design data pipelines that read data directly from the lake, without interacting with Dynamics at all. Data Analytics tools can be used to process and transform this data to honor different third-party requirements.

Features such as Business Events are a good trigger for solutions based on the Publisher-Subscriber pattern or similar messaging orchestrations. Business events should not be used to export data, only as a notification system. However, these notifications may report that a data export package is ready to be consumed. For example, this makes sure that when the external system comes to read the data, it has already been processed and is ready.

If a lot of data must be sent in a business event (again, something to avoid, as business events are not designed for data export), consider the claim-check pattern mentioned earlier, which works the same way for outbound scenarios: save the data into a proper data store and include a reference to it in the business event payload. When working with highly transactional entities, validations must be put in place to keep the number of business events triggered to a minimum and avoid putting too much stress on the events kernel.
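One way to keep the number of events low for highly transactional entities is to coalesce changes before notifying. The Python sketch below uses a hypothetical `EventCoalescer` class that keeps only the latest version of each record between flushes, so a hundred updates to the same sales order produce a single notification. This illustrates the idea only and is not a feature of the Business Events framework.

```python
from typing import Dict, List, Tuple


class EventCoalescer:
    """Collapse many changes to the same record into one notification per flush (hypothetical)."""

    def __init__(self) -> None:
        self._latest: Dict[Tuple[str, str], int] = {}  # (entity, key) -> latest version seen

    def record_change(self, entity: str, key: str, version: int) -> None:
        current = self._latest.get((entity, key))
        if current is None or version > current:
            self._latest[(entity, key)] = version

    def flush(self) -> List[dict]:
        """Emit one event per changed record, then start a new window."""
        events = [
            {"entity": entity, "key": key, "version": version}
            for (entity, key), version in sorted(self._latest.items())
        ]
        self._latest.clear()
        return events


if __name__ == "__main__":
    coalescer = EventCoalescer()
    for v in range(1, 101):
        coalescer.record_change("SalesOrder", "SO-1", v)  # 100 updates to one order
    coalescer.record_change("SalesOrder", "SO-2", 1)
    print(coalescer.flush())  # two events instead of 101
```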

- Designs that group sets of transactions together and export them when a ‘full package’ is ready will perform better than trying to synchronize entities with external systems in real time. Real-time integrations have a significant performance impact on both sides and must be avoided.
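The ‘full package’ approach can be sketched with a small Python batcher. Transactions are buffered and handed to a `send` callback only when the package reaches the configured size, or when `flush` is called explicitly (for example, at the end of a batch run). The class name and package size are hypothetical.

```python
from typing import Callable, List


class PackageBatcher:
    """Buffer transactions and export them as complete packages (hypothetical)."""

    def __init__(self, package_size: int, send: Callable[[List[dict]], None]) -> None:
        self.package_size = package_size
        self.send = send
        self._buffer: List[dict] = []

    def add(self, txn: dict) -> None:
        self._buffer.append(txn)
        if len(self._buffer) >= self.package_size:
            self.flush()

    def flush(self) -> None:
        """Send whatever is buffered as one package (no-op when empty)."""
        if self._buffer:
            self.send(list(self._buffer))
            self._buffer.clear()


if __name__ == "__main__":
    sent: List[List[dict]] = []
    batcher = PackageBatcher(package_size=3, send=sent.append)
    for i in range(7):
        batcher.add({"id": i})
    batcher.flush()  # end of run: export the partial package too
    print([len(p) for p in sent])  # [3, 3, 1]
```

Seven transactions become three export calls instead of seven, and the ratio improves as the package size grows.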

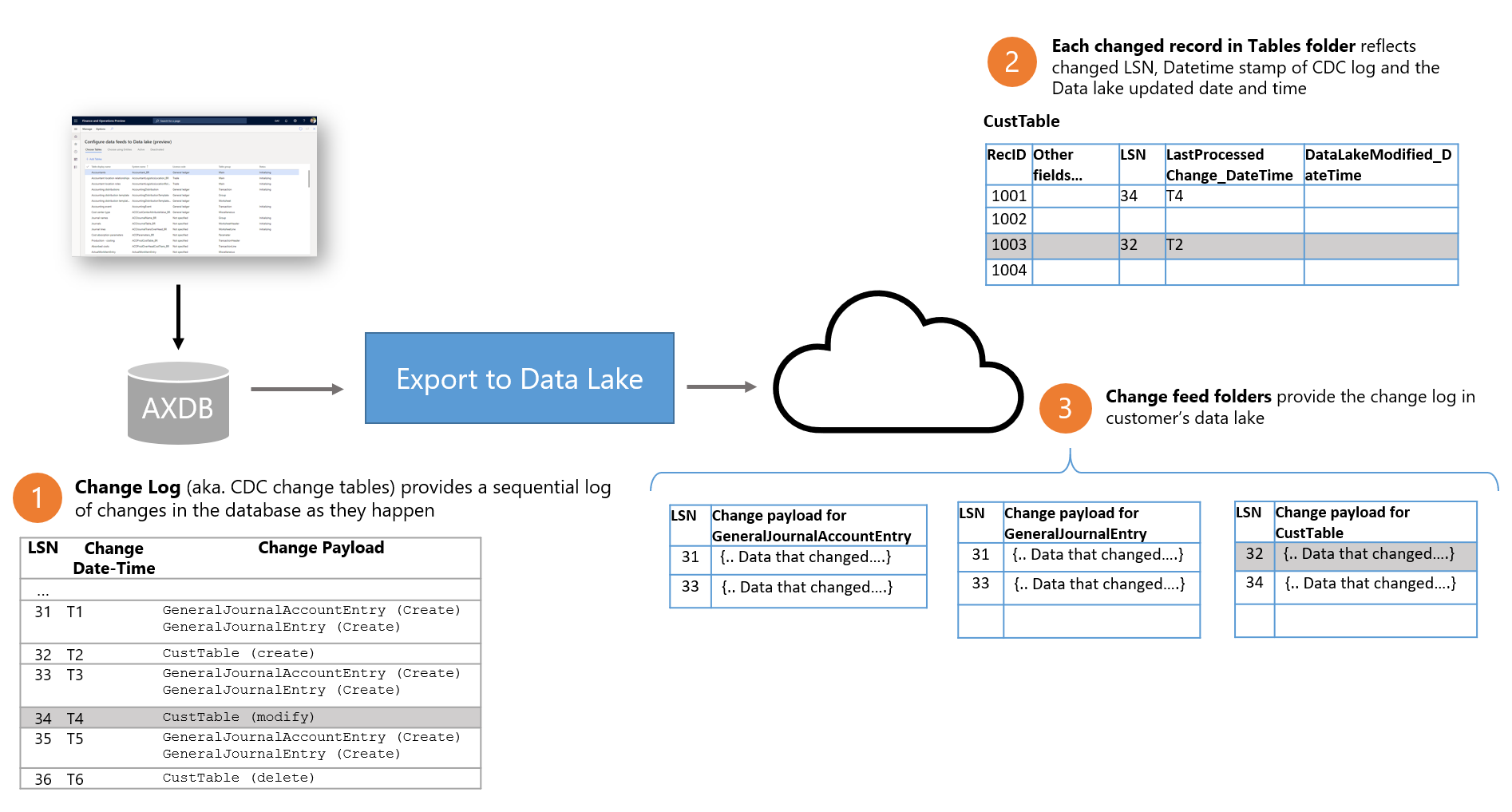

Trying to mimic real-time integrations also goes against the idea of keeping systems isolated. If a real-time integration is a hard business requirement, try using the standard export to Azure Data Lake and use the lake as the source for the external system. The export to Azure Data Lake offers mechanisms to detect data changes that can trigger data pipelines on every change, such as the Change feed folder.
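A minimal Python sketch of incremental, change-driven consumption using a watermark. Each run processes only the change records newer than the last one it handled and then advances the watermark. The record layout (a `seq` field) is an assumption for illustration and does not reproduce the actual structure of the Change feed folder.

```python
from typing import Iterable, List, Tuple


def read_changes(changes: Iterable[dict], watermark: int) -> Tuple[List[dict], int]:
    """Return the changes newer than `watermark`, in order, plus the new watermark."""
    new = sorted((c for c in changes if c["seq"] > watermark), key=lambda c: c["seq"])
    new_watermark = new[-1]["seq"] if new else watermark
    return new, new_watermark


if __name__ == "__main__":
    feed = [{"seq": 1, "op": "insert"}, {"seq": 2, "op": "update"}]
    batch, mark = read_changes(feed, watermark=0)  # first run: both changes
    feed.append({"seq": 3, "op": "delete"})
    batch, mark = read_changes(feed, mark)  # next run: only the new change
    print(batch, mark)
```

Persisting the watermark between runs lets the external consumer pick up where it left off without querying Dynamics directly.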

Check the Azure Architecture Center:

- Design patterns – Publisher-Subscriber

- Export to Azure Data Lake overview

- Change data in Azure Data Lake

Conclusions

From the earliest design phases with business decision makers to the final technical implementation, we must think not only about how to design a system that does what it is expected to do, but also about one that can do it at the appropriate scale and speed. ‘It works on my machine’ is never enough: it must work in the production environment with production-size data volumes and concurrency.

Design with production-level requirements in mind: how will you monitor your system as a whole, including integrations? Who will be responsible for reacting in the event of errors? Which tools will these teams have to troubleshoot the different components? This is a critical part of “productionizing” your integrations.

Often, individual system components are designed and tested in isolation, in development environments, and only against “the happy path”. But a critical system must also be evaluated as a whole. Worst-case scenarios must be validated through stress testing that involves all required components and production-like data volumes, to confirm performance and resilience requirements. Making such changes when the system is completely finished will be more expensive and more traumatic than building a good design from the beginning and maintaining it throughout all implementation phases.

Keep reading:

- Design principles for Azure applications

- Use the best data store for your data

- Artificial intelligence (AI) architecture design