Low-Code Custom Data Import in Finance and Operations Apps [ENG]

Motivations

Data integrations are a fundamental requirement for cloud ERP implementations and, historically, in Dynamics have been written in X++ code to read the data, process the data, and eventually save the data on a format Dynamics can understand and use. These manually coded ETL processes also have been, and still are, a common source of problems regarding maintainability, reusability, and particularly performance, as not always have been coded to perform well with large data volumes.

As customers are progressively wiling to adapt low-code/no-code solutions that are easier to extend and require less maintenance cost, we’d like to show an example on how a typically development-heavy task can be approached as a low-code customization.

Disclaimers

(1) The final result of this proof-of-concept was a team effort. Ainhoa Menoyo created the flow in Power Automate. Damien Bird brought the Azure Function idea. I just tuned and assembled all components together getting to the end-to-end final workflow described in this article. I encourage you to also involve your wider development teams so you can get the best out of all technologies when thinking about Finance and operations requirements and customizations.

(2) I’m deliberatively not going straight deep into the technical details because I think the interesting point on this case is following the train of thought that allows you to approach your own requirements with similar strategies. The technical details are all well documented in the docs.

The case

The case we were trying to solve was quite straightforward individually, but it’s a task that is repeated in every project, oftentimes duplicating code and generating heavy workloads on specific X++ classes that are difficult to scale for large volumes. That’s it: some external party put files somewhere (in this case on a Blob Storage, but it can be anywhere Power Automate can reach) and we want to import the content of these files into F&O.

First thing that comes to our minds is using the Data Management Framework (DMF) in Finance and Operations, with its Recurring integrations API that allows queuing data import jobs from external applications. This is all standard, no customization needed.

The tricky part here is you may want to use data packages to enable importing into data entities directly, which is quite important to reduce customizations. Then we get to find a way to create the data packages ‘manually’ in Power Automate to build a file that Finance and Operations will import by using only standard features.

The format of a data package is a compressed file that contains a package manifest, a package header, and any other files for the data entities that are included. You can create a data package by creating a DMF job and exporting the current data. What we did here is to get the package header and manifest from this auto-generated package and upload them somewhere reachable by Power Automate (in this case, to the same storage account where files will be dropped). After these preparation steps, Power Automate has all the components to create new data packages by itself.

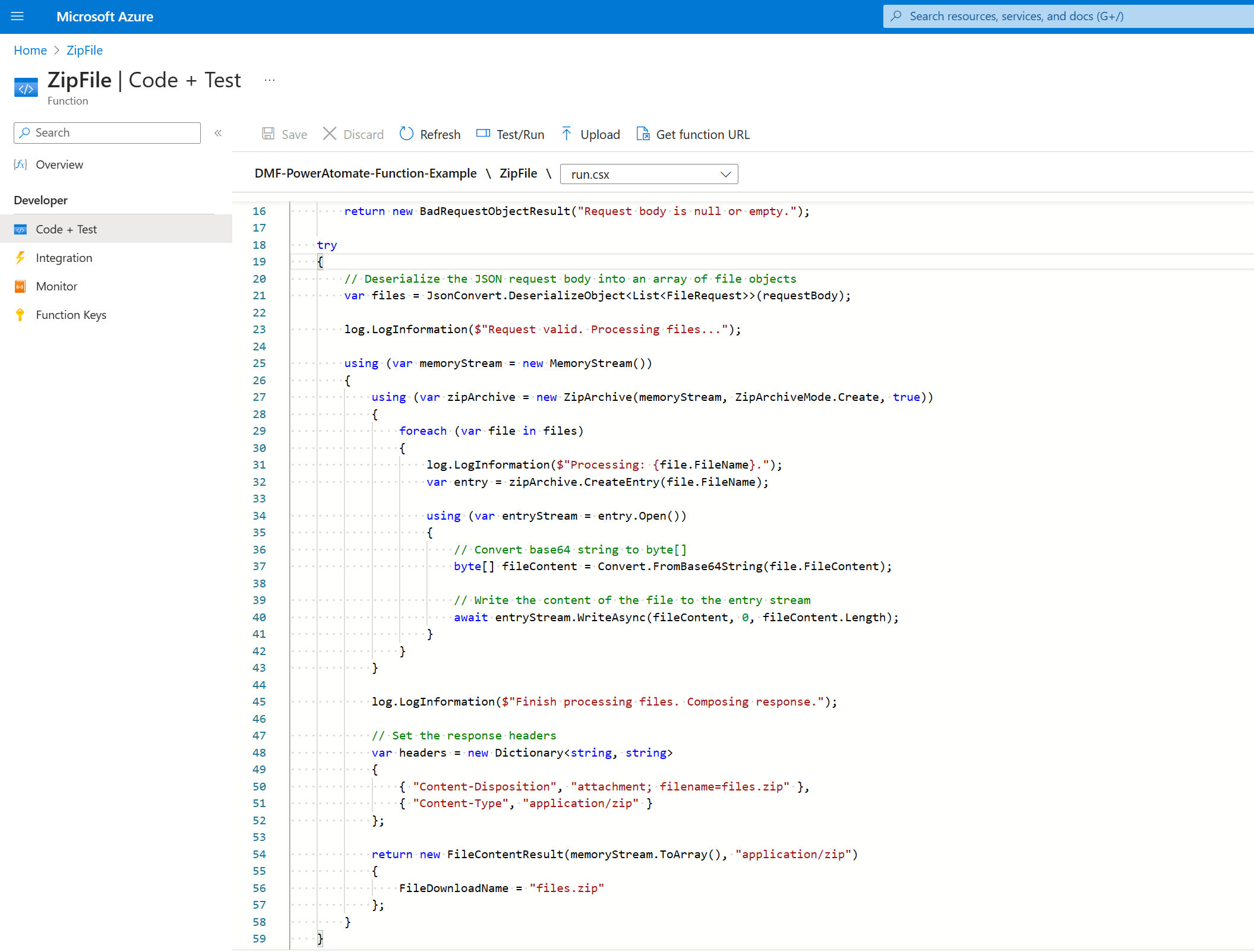

A small but important missing detail is we need to Zip some files together to build the data package, but so far, this is not something Power Automate can do by itself. We used an Azure Function written in C# to achieve this task (this is the low-code part). This function can be reused in multiple integrations where Zip files are needed.

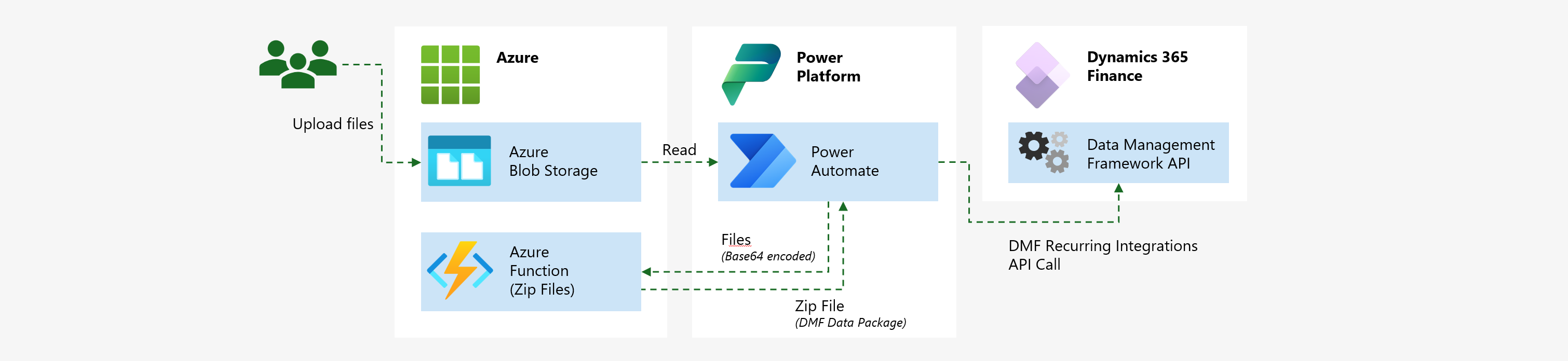

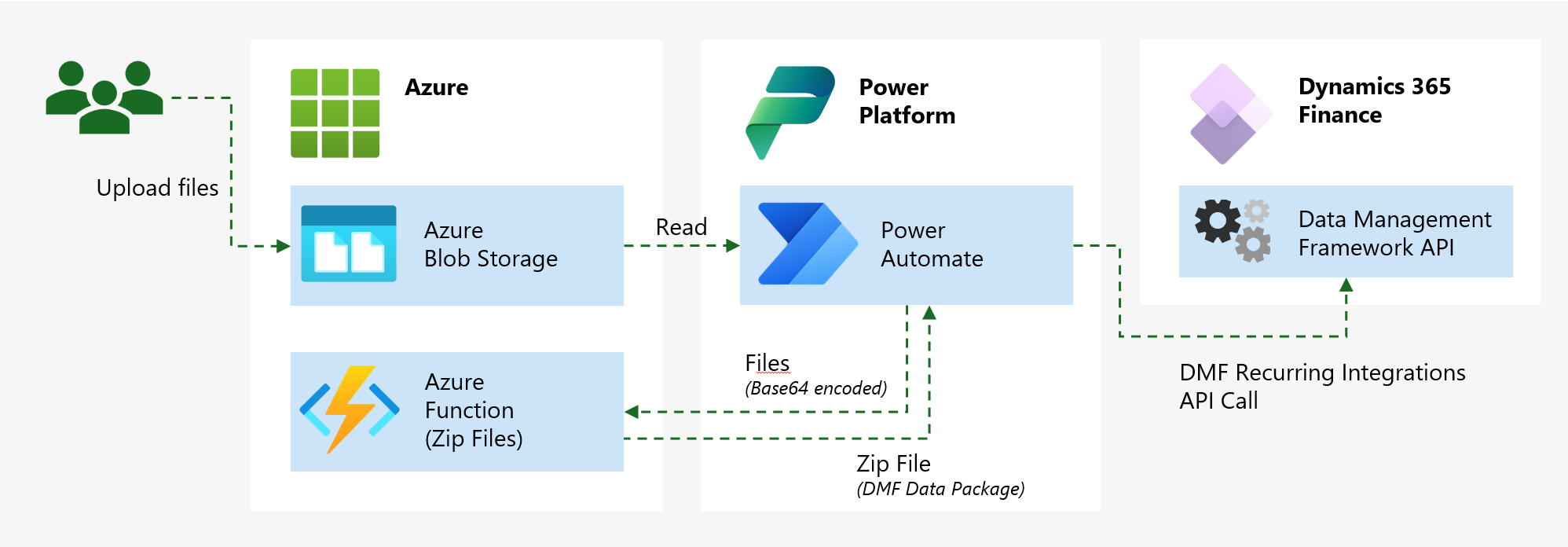

Then the overall solution looks like that:

The solution

The Finance and operations piece (all standard)

The Finance and Operations components involved are all standard DMF tasks. For simplicity we are using a standard data entity here, but every entity will work.

- Create a DMF project with your required data entities and export a data package.

- Upload header and manifest files to the storage of your choice.

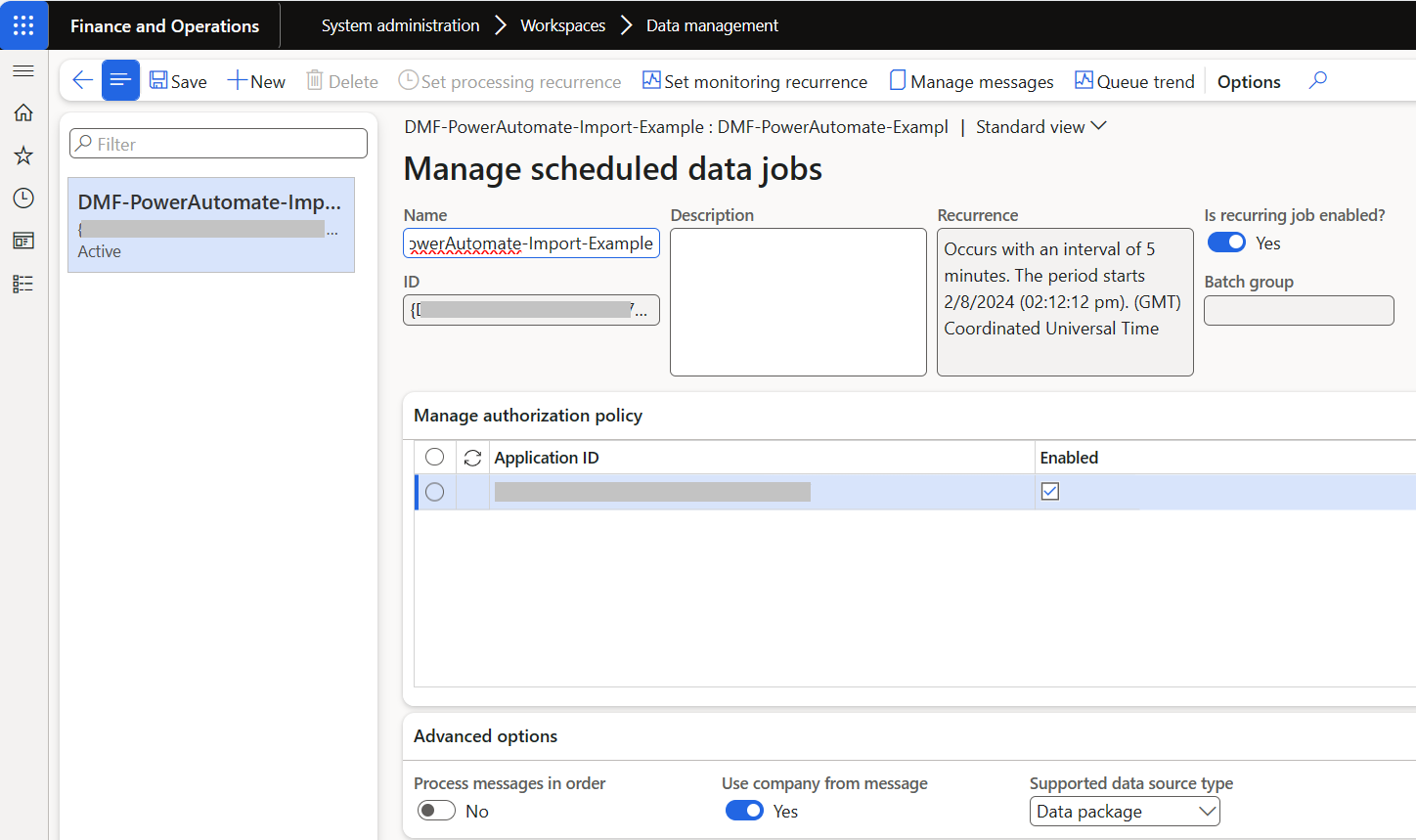

- Create a DMF Import project, with the same entity as the exported package.

- Configure a recurring integration job.

- An Application ID registered in your Entra domain is required for the job to work, that should be prepared earlier.

- Save the job ID for later, you’d need it.

More information:

- https://learn.microsoft.com/en-us/dynamics365/fin-ops-core/fin-ops/data-entities/data-import-export-job

- https://learn.microsoft.com/en-us/dynamics365/fin-ops-core/dev-itpro/data-entities/recurring-integrations

The Azure Function piece (aka the ‘low code’)

Not much to say here, it’s a simple function that gets a serialized object, deserializes it to get the original bytes, and save these bytes into a Zip file. The function itself is agnostic and can be used in many other scenarios, and most likely somebody will come with a better implementation (please share it!), but it just works.

More information:

The Power Automate piece (aka the ‘no code’)

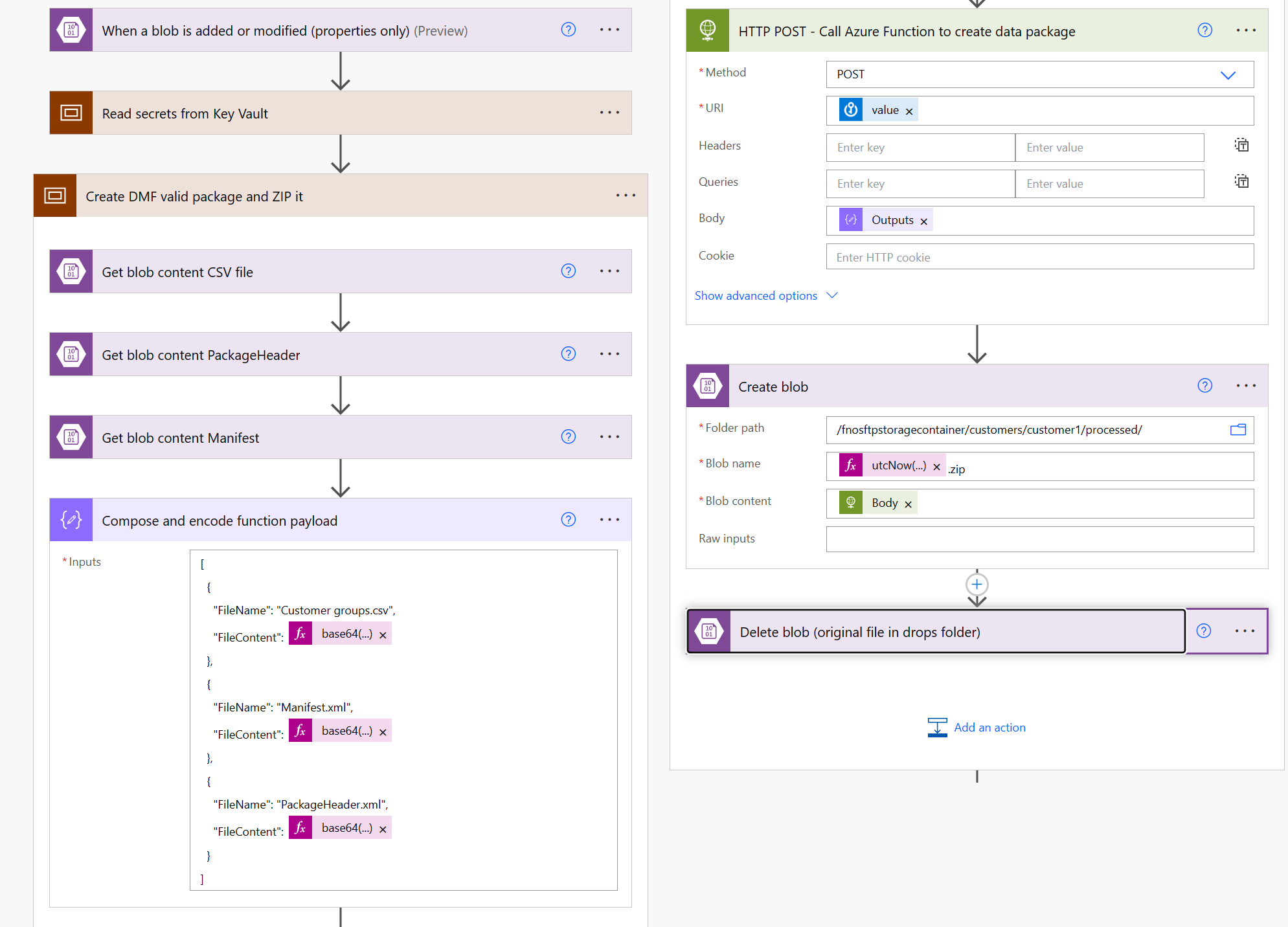

Here is where most of the interesting activities happen. This flow is split into 3 different sections, as described below:

First one gets a set of secrets from Azure Key Vault. You may want to store these elements differently, that is fine, but be sure not using secrets in plain text. You may want to provide these values as flow variables if needed, but I would recommend storing the secret ones in a Vault.

These are the values we would need for the flow to work as it is designed here (it may change on your own implementation):

- F&O base Uri

- Azure Function Uri

- For DMF recurring job:

- Application ID (I mentioned it in a previous step)

- For DMF API Authentication:

- Tenant ID

- Client ID

- Client Secret

Second section is where it reads the file that want to be imported, together with the files required to build the DMF data package, serializes it (Compose and encode), calls the Azure Function and save the Zip file in the storage account (these steps are optional, created to test the flow and serves as example).

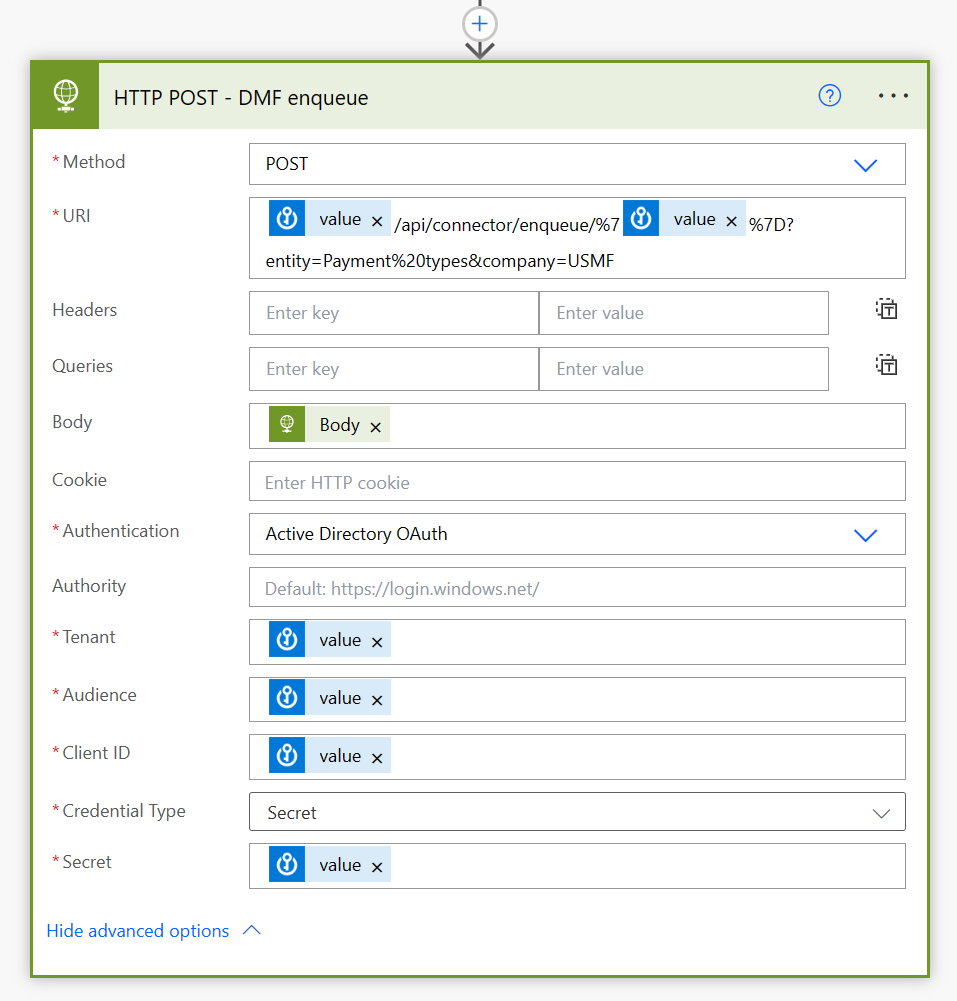

And the last step is queuing the import job into F&O with the recurring integration API, with an HTTP POST action. Here is where the values read from the Vault are used for the OAuth Authentication:

Conclusion

As mentioned in the introduction, the technical part on this solution is not particularly exceptional, but it highlights the approach trying to fit business requirements without jumping into development mode straight away and think if there are ways to achieve the same business outcomes with a solution that is easier to maintain.